Kubernetes is becoming increasingly popular for many workloads, both in the cloud and on-premises. At the same time, several solutions have emerged for running Kubernetes on edge and IoT devices. Edge computing offers benefits such as low latency, low cost, and a small footprint. Combining edge devices with cloud-native solutions brings many further benefits:

- Ease of deployment and management

- Automation

- Modern and cloud-native way of developing

- Observability

- Resilience

- Scalability

Avinton also provides Edge AI Camera solutions for many use cases that require real-time inference. But what happens if we want to deploy 100+ devices at the edge? Even with 20+ devices, setting them up would be very painful, even with provisioning or auto-installation methods. The management cost of running the devices also grows as the number of devices increases. By adopting Kubernetes for edge workloads, we can eliminate many manual tasks. The more devices we have at the edge, the more cost we can save by leveraging Kubernetes.

Several open-source Kubernetes distributions are designed for edge or IoT use cases. We tested the following distros and compared their features, performance, deployment costs, etc.

- k0s: The Simple, Solid & Certified Kubernetes Distribution

- k3s: The certified Kubernetes distribution built for IoT & Edge computing

- microk8s: Low-ops, minimal production Kubernetes, for devs, cloud, clusters, workstations, Edge and IoT.

- KubeEdge: A Kubernetes Native Edge Computing Framework

Setup

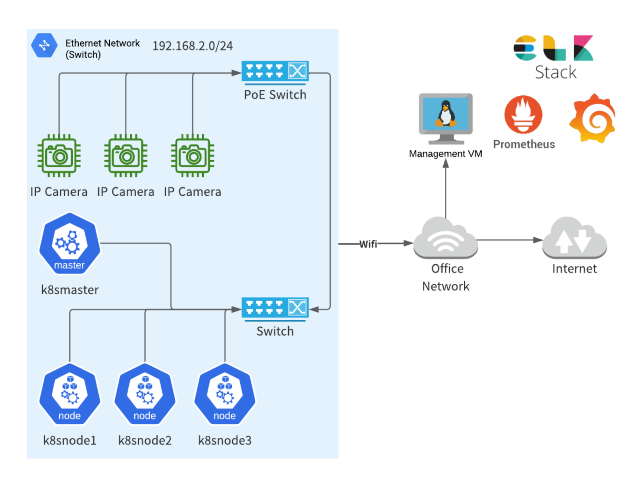

Our device configuration is as follows:

- 3 mini PCs as worker nodes

- 1 mini PC as a master node

- 3 IP cameras for inference applications

- Network switch for interconnecting

- PoE switch for IP cameras

- Management VM – running Prometheus, Grafana, and an ELK stack for monitoring

The mini PC specs are as follows:

- 4 core CPU

- 4GB Memory

- 60GB Storage

- Ethernet connection

- Wi-Fi available

Analysis

We analyzed and compared those distributions from these perspectives:

- Deployment and management costs

- Edge Network

- Customization

- Resource and performance

- Documentation and community

Deployment and management

k0s and k3s can be deployed very easily and quickly.

Each is a single binary that bundles all of the necessary components (kubelet, runc, k8s-api, etcd, etc.).

The cluster is very easy to configure, and the uninstall step is also very clean.

microk8s also ships as a single snap package, but its components run as separate systemd services.

Configuration must be applied per component, and the respective systemd service must then be restarted.

The uninstall command took a very long time, which has also been reported by the community.

kubeedge can be installed with the keadm command, which is similar to kubeadm.

keadm can install either cloudcore or edgecore; cloudcore runs as a systemd process on the control plane, and edgecore runs as a systemd process on the edge node. However, the control plane nodes have to be set up as a native Kubernetes cluster before installing kubeedge.

The latest version supports running cloudcore as Kubernetes resources.

The management cost is higher, and the installation steps are not as simple as for the other distros.

Edge Network

Most of them support various CNIs, so any preferred CNI can be installed.

Some CNIs (like Calico) require access from the edge node to the Kubernetes API.

To run nodes in a different network (for example, behind a dynamic public IP address on LTE), konnectivity seems to be a good option.

konnectivity uses a server-client architecture: the konnectivity agent on the edge device connects to the konnectivity server on the controller nodes.

k0s supports konnectivity by default for communication between workers and controllers.

konnectivity is also described in the official Kubernetes documentation, and it can be enabled manually on any Kubernetes cluster.

kubeedge adopts a different kind of networking between controller and worker: cloudhub and edgehub.

This is also a server-client architecture, and it allows nodes to run in a different network.
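
The common idea behind both approaches is that the edge side opens the connection, and the controller then reuses it to reach the edge. The following is a minimal Python sketch of that pattern only. It is not the actual protocol of konnectivity or kubeedge; the function names and the request text are hypothetical, and a real implementation would add authentication, multiplexing, and reconnection.

```python
import socket
import threading


def edge_agent(server_addr: tuple) -> None:
    """Hypothetical edge-side agent: dials OUT to the controller, then serves a request."""
    with socket.create_connection(server_addr) as conn:
        request = conn.recv(1024).decode()
        # Answer the controller's request over the connection the agent opened.
        conn.sendall(f"edge handled: {request}".encode())


def controller_request(listener: socket.socket, request: str) -> str:
    """Hypothetical controller side: waits for the agent, then reuses its connection."""
    conn, _ = listener.accept()
    with conn:
        conn.sendall(request.encode())
        return conn.recv(1024).decode()


# Only the controller needs a reachable address; port 0 picks a free local port.
listener = socket.create_server(("127.0.0.1", 0))
agent = threading.Thread(target=edge_agent, args=(listener.getsockname(),))
agent.start()

reply = controller_request(listener, "get pod logs")
agent.join()
listener.close()
print(reply)
```

Because the controller never dials the edge device, the device can sit behind NAT or a changing IP address as long as it can reach the controller.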

Resource and performance

For k0s and k3s, resource usage is reasonable: about 440 MB of memory on worker nodes when idle.

For microk8s, memory usage is about 700 MB when idle.

For kubeedge, memory usage is about 300 MB when idle.
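
To put these idle figures in context, the share of a 4GB mini PC that each distro occupies can be worked out as below. This is a rough sketch: it assumes that 4GB means 4096 MB and ignores memory used by the operating system, and the names are illustrative.

```python
# Idle memory usage on a worker node (MB), taken from the measurements above.
IDLE_MEMORY_MB = {"kubeedge": 300, "k0s": 440, "k3s": 440, "microk8s": 700}

# Assumption: the 4GB mini PC exposes 4096 MB of memory.
NODE_MEMORY_MB = 4096


def idle_share(distro: str) -> float:
    """Return the percentage of node memory used by the distro when idle."""
    return 100 * IDLE_MEMORY_MB[distro] / NODE_MEMORY_MB


for name in IDLE_MEMORY_MB:
    print(f"{name}: {idle_share(name):.1f}% used, "
          f"{NODE_MEMORY_MB - IDLE_MEMORY_MB[name]} MB left for workloads")
```

Under these assumptions the idle overhead is roughly 7% for kubeedge, 11% for k0s and k3s, and 17% for microk8s.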

The following are the results of perf-tests using the cluster loader script.

We ran only the simple test case from the getting-started guide, which creates a deployment and scales it to 10 replicas.

| Test case | kubeedge | k0s | k3s | microk8s |
|---|---|---|---|---|
| Start measurements | 0.101133 s | 0.10159 s | 0.101366 s | 0.101339 s |
| Cluster API | 0.000221 s | 0.000172 s | 0.000165 s | 0.000239 s |
| Cluster performance | 0.000419 s | 0.000317 s | 0.000313 s | 0.000267 s |
| Create deployment | 1.002712 s | 1.003559 s | 1.001963 s | 1.008023 s |
| Wait for pods to be running | 14.079484 s | 5.013258 s | 5.01466 s | |
| Measure pod startup latency | 0.019507 s | 0.018685 s | 0.029368 s | 0.021512 s |
| Test overall (including clean-up duration) | 35.261 s | 21.188928 s | 21.231549 s | 21.256 s |

The results show little difference between the distros; only kubeedge shows a noticeable delay in pod deployment (about 14 s waiting for pods to be running, versus about 5 s for k0s and k3s).
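
The size of that delay can be expressed as a ratio against the fastest distro. The snippet below is a small sketch using the table values; the helper name `slowdown` is illustrative, and microk8s is left out of the pod-wait comparison because the table has no value for it.

```python
# "Wait for pods to be running" durations in seconds, from the table above.
# microk8s is omitted because the table has no value for it.
WAIT_FOR_PODS_S = {"kubeedge": 14.079484, "k0s": 5.013258, "k3s": 5.01466}

# Overall test durations in seconds, including clean-up.
OVERALL_S = {"kubeedge": 35.261, "k0s": 21.188928, "k3s": 21.231549, "microk8s": 21.256}


def slowdown(results: dict, distro: str) -> float:
    """Return how many times slower `distro` is than the fastest entry."""
    return results[distro] / min(results.values())


print(f"pod wait: kubeedge is {slowdown(WAIT_FOR_PODS_S, 'kubeedge'):.1f}x the fastest")
print(f"overall:  kubeedge is {slowdown(OVERALL_S, 'kubeedge'):.1f}x the fastest")
```

With a single run of a 10-replica deployment, these ratios are indicative only.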

Customization

Most of the distros offer a wide choice of CRI, CNI, and CSI implementations.

CRI

containerd is supported by default on all 4 distros.

k0s, k3s, and microk8s install containerd automatically by default.

cri-o is also supported by all except microk8s, but some manual steps are required.

They provide enough documentation to enable it.

nvidia-container-runtime can be enabled manually on k0s and kubeedge.

microk8s provides an add-on for GPU.

k3s does not seem to officially support GPU, but workarounds to enable it can be found on the web.

CNI

Most CNIs can be enabled manually.

k0s supports Calico or kube-router by default.

k3s uses Flannel as its default CNI.

microk8s enables Flannel in non-HA mode and Calico in HA mode.

kubeedge provides edgemesh as its default CNI.

CSI

Most CSIs can be enabled manually.

k0s offers OpenEBS as an add-on, which allows a host path or device to be used as a volume backend.

k3s supports Longhorn (which also originated at Rancher).

HA

High availability configuration can be enabled on all distros.

Documentation and community

For k0s and k3s, the documentation is well structured and complete.

Also, the community on GitHub is active and releases are frequent.

The documentation for microk8s is good too.

kubeedge's documentation is a bit hard to follow.

Some pages are stale and do not reflect the latest information.

Summary table

This is the summary table of basic features at this moment (Apr 2022).

| | kubeedge | k3s | k0s | microk8s |
|---|---|---|---|---|
| Deployment costs | complex | simple | simple | simple |
| Configuration | complex | simple | simple | separated config files |
| Documentation | available but not sufficient | good | good | good |
| Isolated network | cloudhub – edgehub | – | konnectivity | – |
| Idle resource utilization | ~300MB | ~440MB | ~440MB | ~700MB |
| Performance | a bit slow for pod initializing | fast | fast | fast but slow for stopping the node |
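
As a quick way to apply this table, part of it can be encoded as data and filtered by requirement. The sketch below is only illustrative: the field names and the `shortlist` helper are made up, and a dash in the "Isolated network" row is interpreted as "no built-in mechanism", even though konnectivity can be enabled manually on any Kubernetes cluster.

```python
# Summary-table values re-encoded as data; field names are illustrative.
DISTROS = {
    "kubeedge": {"deployment": "complex", "isolated_network": "cloudhub - edgehub", "idle_mb": 300},
    "k3s": {"deployment": "simple", "isolated_network": None, "idle_mb": 440},
    "k0s": {"deployment": "simple", "isolated_network": "konnectivity", "idle_mb": 440},
    "microk8s": {"deployment": "simple", "isolated_network": None, "idle_mb": 700},
}


def shortlist(need_isolated_network: bool, simple_deployment: bool) -> list:
    """Return the distro names that satisfy the given requirements."""
    names = []
    for name, info in DISTROS.items():
        if need_isolated_network and info["isolated_network"] is None:
            continue
        if simple_deployment and info["deployment"] != "simple":
            continue
        names.append(name)
    return names


print(shortlist(need_isolated_network=True, simple_deployment=True))   # ['k0s']
print(shortlist(need_isolated_network=True, simple_deployment=False))  # ['kubeedge', 'k0s']
```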

Conclusion

Kubernetes can now run on edge devices with limited resources. For edge solutions, Kubernetes helps reduce management and deployment costs as the number of devices grows.

For edge use cases, k0s seems to be the best choice at this moment (Apr 2022) for its simplicity and features. It is easy to deploy and manage and ships as a single binary, so even application developers without much Kubernetes experience can operate clusters by themselves. With konnectivity enabled by default, k0s also allows a cluster to be managed from isolated control plane nodes.

Despite the complexity of its operation and documentation, kubeedge also supports running nodes in a different network and provides many flexible features for edge use cases (device CRD, queuing system, etc.). Since it is an extension of native Kubernetes and its control plane is a native Kubernetes cluster, it integrates easily with existing Kubernetes-based solutions and applications. However, it requires Kubernetes engineers and more work than other solutions like k0s.

Through this test, we can see that the community is working to run more workloads on the edge. We believe this area will grow fast and that interesting new solutions will emerge in the coming years.