Scope

For specialised, IO-heavy applications, certain considerations must be taken into account to make the most of the available processing hardware.

Achieving this requires a well-designed storage fabric and good connectivity to and from the compute nodes.

Here I share our experience from a recent solution design for a deep learning application using NVIDIA’s DGX-1.

Our client had the following requirements:

- Fast Read

- Fast Write

- Low Cost

As with many emerging machine learning use cases, this was an R&D project, and the client already had the full data set backed up on an isolated enterprise storage solution. As a result, fault tolerance was not a big concern: the raw data could be reloaded from source at any time in case of a failure.

This article goes through some of the considerations behind the design of a commodity-hardware-based storage cluster.

What is NVIDIA DGX-1?

NVIDIA DGX-1

This is a purpose-built appliance for deep learning applications.

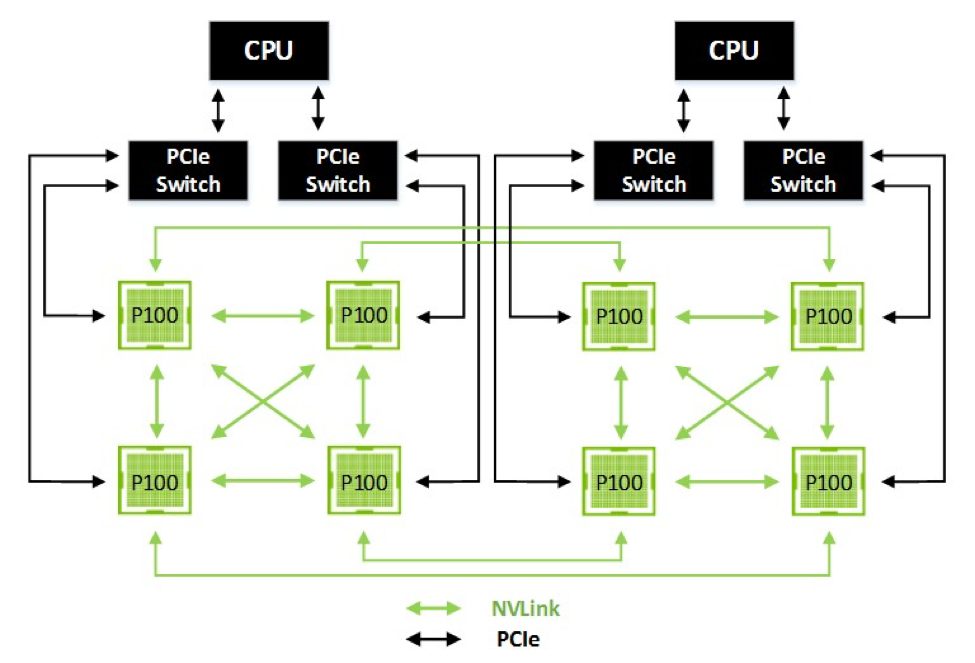

It achieves supercomputer-like processing power by using eight Tesla P100 GPUs interconnected by NVIDIA's proprietary NVLink interface.

NVIDIA NVLink

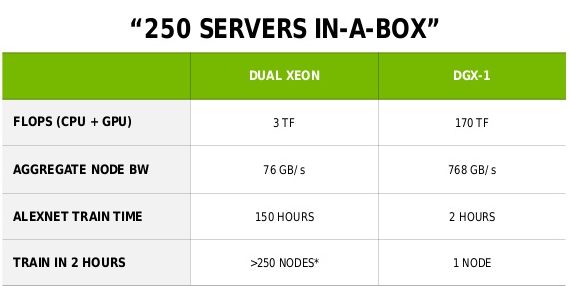

NVIDIA claims it can replace ~250 conventional servers (we believe it!). It's hard to find any off-the-shelf hardware that can beat its performance in teraflops in just 3U of rack space.

DGX-1 vs Dual Xeon Server

Storage Considerations

With 170 teraflops (half precision) of computing power, it is important that the storage solution does not leave those processing cores idle. We want to avoid the situation where storage becomes the bottleneck.

If the storage can keep up with the data feed and data sink requirements, we reach results sooner and get the most value out of the machine learning system.
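To put "keeping up" in concrete terms, the sketch below turns a training workload into the sustained read and write bandwidth the storage must deliver. The `Workload` fields and the example figures are hypothetical placeholders for illustration, not measurements from this project.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Workload:
    # All fields are hypothetical inputs; replace them with measured values.
    samples_per_sec: float          # training throughput across all GPUs
    read_bytes_per_sample: float    # average size of one input sample on disk
    write_bytes_per_sample: float   # average output written back per sample


def required_bandwidth(w: Workload) -> Tuple[float, float]:
    """Return (read_bytes_per_sec, write_bytes_per_sec) the storage must sustain."""
    read_bps = w.samples_per_sec * w.read_bytes_per_sample
    write_bps = w.samples_per_sec * w.write_bytes_per_sample
    return read_bps, write_bps


if __name__ == "__main__":
    # Hypothetical example: 5,000 samples/s, ~150 KB read and ~50 KB written each.
    read_bps, write_bps = required_bandwidth(Workload(5_000, 150e3, 50e3))
    print(f"read:  {read_bps / 1e6:.0f} MB/s")
    print(f"write: {write_bps / 1e6:.0f} MB/s")
```

With these placeholder figures the storage must sustain about 750 MB/s of reads and 250 MB/s of writes; real targets should come from profiling the actual training pipeline.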

Faster machine learning results allow us to run more tests and fine-tune algorithms for greater applicability and accuracy.

Commodity Hardware based Storage

What do we mean by Commodity Hardware based Storage?

Commodity-hardware-based storage is a purpose-built cluster of commodity servers with direct-attached storage (DAS) arranged in RAID arrays for HOT / WARM / COLD storage areas. You can find out more about HOT / WARM / COLD storage here (Avinton Storage Solutions).

We typically use Dell or HP servers, but any recent hardware that supports DAS can be used.

The HOT storage can be, for example, an SSD array, whereas the WARM / COLD storage can use standard 15k rpm SAS drives.
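The HOT / WARM / COLD split is usually driven by how recently data was accessed. A minimal sketch of such a policy, assuming last-access timestamps are available and using arbitrary thresholds (one week for HOT, 90 days for WARM) that are purely illustrative:

```python
import time
from typing import Optional

# Hypothetical thresholds: tune them to the actual access pattern.
HOT_MAX_AGE_S = 7 * 24 * 3600     # accessed within the last week
WARM_MAX_AGE_S = 90 * 24 * 3600   # accessed within the last 90 days


def classify_tier(last_access_ts: float, now: Optional[float] = None) -> str:
    """Map a file's last access time (Unix seconds) to a storage tier."""
    if now is None:
        now = time.time()
    age = now - last_access_ts
    if age <= HOT_MAX_AGE_S:
        return "HOT"    # SSD array
    if age <= WARM_MAX_AGE_S:
        return "WARM"   # 15k rpm SAS
    return "COLD"       # 15k rpm SAS or cheaper, slower media
```

A file's last-access time can be read with `os.stat(path).st_atime`, but many storage mounts use `noatime` or `relatime`, in which case access times may be stale and a different usage signal is needed.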

The machines run customised Linux distributions that provide enterprise-storage-like features at a lower cost.

Maintenance

With commodity hardware, the maintenance overhead is higher than with enterprise storage solutions because there are more components in the solution.

This can be significantly alleviated by good monitoring and regular housekeeping.
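As an example of the kind of housekeeping check meant here, the sketch below flags any storage pool that is filling up, using Python's standard `shutil.disk_usage`. The 85% threshold and the mount point list are assumptions; a production setup would also watch RAID controller and SMART health, which this sketch does not cover.

```python
import shutil
from typing import Iterable, List, Tuple


def check_usage(paths: Iterable[str], threshold: float = 0.85) -> List[Tuple[str, float]]:
    """Return (path, used_fraction) for every mount whose usage exceeds threshold."""
    alerts = []
    for path in paths:
        usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
        used_fraction = usage.used / usage.total
        if used_fraction > threshold:
            alerts.append((path, used_fraction))
    return alerts


if __name__ == "__main__":
    # Replace "/" with the pool mount points, e.g. /mnt/hot (hypothetical names).
    for path, frac in check_usage(["/"], threshold=0.85):
        print(f"WARNING: {path} is {frac:.0%} full")
```

Run from cron or a monitoring agent, a check like this catches pools running out of space before jobs start failing.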

Fault Tolerance

Fault tolerance is typically not as good as that of an enterprise storage solution, which has multiple levels of built-in redundancy. It can be improved by keeping hot spare disks in the RAID arrays to minimise the impact of disk failures, and by adding dedicated backup storage or 1:1 cluster mirroring with software-based failover.

(The servers typically use RAID-6, which can tolerate up to two failed drives per array, but this does not cover memory, RAID controller, motherboard or CPU failures.)
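RAID-6 reserves two drives' worth of capacity per array for parity, and hot spares hold no data until a drive fails. A small sketch of the resulting usable capacity per array; the drive count and size in the example are hypothetical:

```python
def raid6_usable(drives: int, drive_size: float, hot_spares: int = 0) -> float:
    """Usable capacity of one RAID-6 array, in the same unit as drive_size.

    RAID-6 keeps two parity blocks per stripe, so two drives' worth of
    capacity is lost to parity; hot spares hold no data until a drive fails.
    The array survives up to two simultaneous drive failures.
    """
    active = drives - hot_spares
    if active < 4:
        raise ValueError("RAID-6 needs at least 4 active drives")
    return (active - 2) * drive_size
```

For instance, 12 bays of 900 GB drives with one hot spare leave 9 data drives, so `raid6_usable(12, 900, hot_spares=1)` gives 8100 GB usable per array.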

Security

RAID-controller-based full disk encryption can be used to secure data, albeit with increased latency.

Scalability

Scalability is achieved by adding more servers to the cluster and configuring them in the controlling software.

These solutions scale surprisingly well into the tens of petabytes.
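To give a feel for what tens of petabytes means in server count, the following back-of-the-envelope sketch divides a capacity target by the usable capacity of one node. The per-node figure is a hypothetical input, and decimal units (1 PB = 1000 TB) are assumed.

```python
import math


def nodes_for_capacity(target_pb: float, usable_tb_per_node: float) -> int:
    """Storage servers needed to reach a target usable capacity (decimal units)."""
    return math.ceil(target_pb * 1000 / usable_tb_per_node)
```

With a hypothetical 100 TB usable per node, a 20 PB target works out to `nodes_for_capacity(20, 100) == 200` servers, which is why monitoring and housekeeping matter so much at this scale.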

Enterprise Storage Solution

If cost is less of an issue and the use case requires high fault tolerance, security and speed, it is best to go with a dedicated enterprise storage solution from vendors such as Dell EMC or Oracle, or a software-defined storage solution such as Nutanix.

We will not go into the details of those solutions here, but some even use proprietary drives to achieve excellent IO speeds and native encryption, coupled with interconnect options ranging from GbE to multiple InfiniBand ports.

Storage Fabric Interconnect

For high-speed data processing, the storage interconnect is just as important as the choice of storage solution itself. With the DGX-1 this is particularly important, since the on-board GPUs can write data directly to the NIC buffers over PCIe.

This makes the data sink (write) speed just as important as the data feed (read) speed for our storage design, as a slowdown on either side can become a bottleneck.

InfiniBand vs GbE

For a commodity-hardware-based storage solution:

Pretty much any 10/40GbE NIC will suffice, provided it has a supported driver; Intel and Broadcom are good candidates.

Each server needs two dual-port NICs to support redundancy and multi-path.
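Using two NICs rather than one means a single card failure halves a server's bandwidth instead of cutting it off. A minimal sketch of the aggregate line rate per server, assuming all ports are active under multi-path and ignoring protocol overhead:

```python
def server_bandwidth_gbps(nics: int = 2, ports_per_nic: int = 2,
                          port_gbps: float = 10.0, failed_nics: int = 0) -> float:
    """Aggregate line rate (Gbit/s) available to one server over multi-path.

    Assumes every port is active and ignores protocol overhead.
    """
    return (nics - failed_nics) * ports_per_nic * port_gbps
```

With the defaults (2 NICs × 2 ports × 10GbE) a server has 40 Gbit/s available, dropping to 20 Gbit/s if one NIC fails; with 40GbE ports the healthy figure is 160 Gbit/s.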

InfiniBand is likely to push the price up to the point where an enterprise storage solution becomes worth considering. Once you adopt InfiniBand, you need to factor in InfiniBand NICs for every node in the cluster, plus the cabling and the switching gear.

This pushes the price up significantly, whereas comparable IO can be achieved by adding nodes to the storage cluster connected over cheaper 10GbE or 40GbE, giving a more cost-effective solution overall.
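The trade-off can be made concrete: each storage node is limited by the slower of its network links and its disks, so once the disks are the bottleneck, faster links add cost without adding throughput, while extra nodes do add throughput. A sketch under those assumptions (line rate divided by 8 to get GB/s, protocol overhead ignored, all figures hypothetical):

```python
import math


def nodes_for_throughput(target_gbytes_s: float, net_gbps_per_node: float,
                         disk_gbytes_s_per_node: float) -> int:
    """Storage nodes needed so the cluster can serve target_gbytes_s.

    Each node delivers the slower of its network line rate (Gbit/s / 8)
    and its disk throughput; protocol overhead is ignored.
    """
    per_node = min(net_gbps_per_node / 8, disk_gbytes_s_per_node)
    return math.ceil(target_gbytes_s / per_node)
```

For a hypothetical 10 GB/s target with nodes whose disks deliver 3 GB/s, both 40 Gbit/s and 160 Gbit/s of links per node give 4 nodes (`nodes_for_throughput(10, 40, 3)` and `nodes_for_throughput(10, 160, 3)`), so the faster links buy nothing in that case.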

NVMe SSD

NVMe SSDs are significantly faster than other SSDs on the market; however, at the time of writing, enterprise NVMe drives would make a DIY storage solution too expensive to remain competitive.

In our case it made more sense to use many smaller, cheaper SAS/SATA SSDs arranged as a hot storage pool or cache area, and some large 15k rpm spinning disks for warm and cold storage.

The combination of a large number of small SSDs and multi-path makes the system well suited to MPP (massively parallel processing) or GPU-type processing loads.
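The benefit of many small SSDs comes from striping: sequential throughput scales roughly with the number of drives until the RAID controller or bus becomes the limit. A simplified sketch; the per-drive and controller figures are hypothetical, and real arrays also lose some write throughput to parity, which this ignores:

```python
def pool_throughput_mb_s(drive_count: int, per_drive_mb_s: float,
                         controller_limit_mb_s: float) -> float:
    """Approximate sequential throughput of a striped SSD pool.

    Scales linearly with drive count but is capped by the controller/bus.
    Parity overhead on writes is ignored.
    """
    return min(drive_count * per_drive_mb_s, controller_limit_mb_s)
```

For example, 12 SATA SSDs at a hypothetical 500 MB/s each could stripe to 6000 MB/s, but behind a controller capped at a hypothetical 4000 MB/s the pool delivers 4000 MB/s, so the drive count should be matched to the controller and network capacity.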

Software Defined Storage

Software-Defined Storage (SDS) is widely seen as the future of the storage ecosystem.

SDS provides features such as automatic data classification and prioritisation based on usage or access patterns, automatic defragmentation, optimisation, compression and replication.

It is similar to the solution described above in that it can run on commodity hardware, but with some key differences.

SDS uses a software layer that abstracts the hardware away from the target application.

SDS supports both standard and proprietary protocols, with an extended feature set.

Commodity-hardware-based storage provides classic storage services over standard protocols such as iSCSI, NFS and SMB/CIFS; more advanced features such as HA, hot/warm/cold pools or multi-path usually have to be set up or implemented manually using open source components.

The SDS software layer is usually neither free nor open source.

In SDS, all advanced features are automated and manageable from a central interface.

All storage actions can be accessed, controlled and automated programmatically via RESTful APIs.

A typical example of SDS is Nutanix’s Acropolis Distributed Storage Fabric.